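Artificial intelligence experts at Cedars-Sinai and the Smidt Heart Institute have created a dataset of more than 1 million echocardiograms (cardiac video ultrasounds) and their corresponding clinical interpretations. Using this database, they developed EchoCLIP, a powerful machine learning algorithm that can "interpret" echocardiogram images and assess key findings.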

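A paper published in Nature Medicine describes the design and evaluation of EchoCLIP. It suggests that interpreting a patient's echocardiogram with EchoCLIP provides specialist-level clinical assessments, including judgments of heart function, results of past surgeries, and implanted devices, and may help doctors identify patients in need of treatment.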

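The EchoCLIP foundation model can also identify the same patient across multiple videos, studies, and time points, and recognize clinically important changes in a patient's heart.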

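"To our knowledge, this is the largest model trained on echocardiography images," said the study's lead author, David Ouyang, MD, a faculty member in the Department of Cardiology in the Smidt Heart Institute and in the Division of Artificial Intelligence in Medicine.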

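"Many previous AI models for echocardiograms were trained on only tens of thousands of examples. In contrast, EchoCLIP's uniquely strong performance in image interpretation is a result of training on almost ten times more data than existing models."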

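"Our results suggest that large datasets of medical imaging and expert-adjudicated interpretations can serve as the basis for training medical foundation models, which are a form of generative artificial intelligence," Ouyang added.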

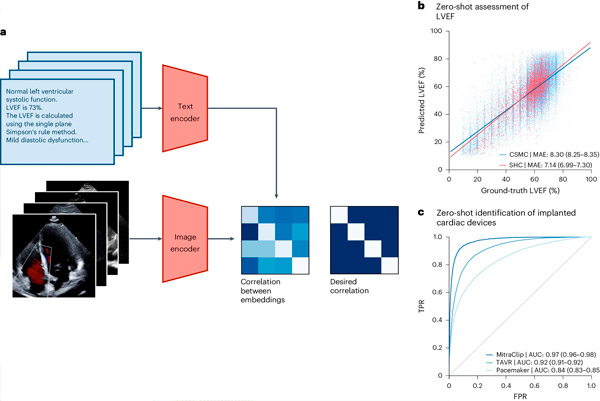

EchoCLIP workflow. Credit: Nature Medicine (2024). DOI: 10.1038/s41591-024-02959-y

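He noted that this advanced foundation model could soon help cardiologists assess echocardiograms by generating estimates of cardiac measurements and by identifying changes over time and common disease states.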

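To develop EchoCLIP, the research team built a dataset of 1,032,975 cardiac ultrasound videos and their corresponding expert interpretations. Key findings from the study include: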

- EchoCLIP performed strongly when assessing cardiac function from heart images.

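- The foundation model was able to identify implanted intracardiac devices, such as pacemakers, mitral valve implants, and aortic valve implants, from echocardiogram images.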

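- EchoCLIP accurately identified unique patients across studies, detected clinically important changes such as prior heart surgery, and enabled preliminary text interpretations of echocardiogram images.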

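"Foundation models are one of the newest areas within generative AI, but most models do not have enough medical data to be useful in healthcare," said Christine M. Albert, MD, MPH, chair of the Department of Cardiology in the Smidt Heart Institute.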

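"This new foundation model integrates computer vision for echocardiogram image interpretation with natural language processing to augment cardiologists' interpretations," added Albert, who was not involved in the study.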